Introduction: Not All Data Is Created Equal

In today’s corporate landscape, “data-driven” has become a badge of credibility. Organisations pride themselves on basing decisions on facts rather than gut instinct. But data, like any tool, is only as good as its foundation. When the underlying data is flawed or unrepresentative, the conclusions it supports can be dangerously misleading.

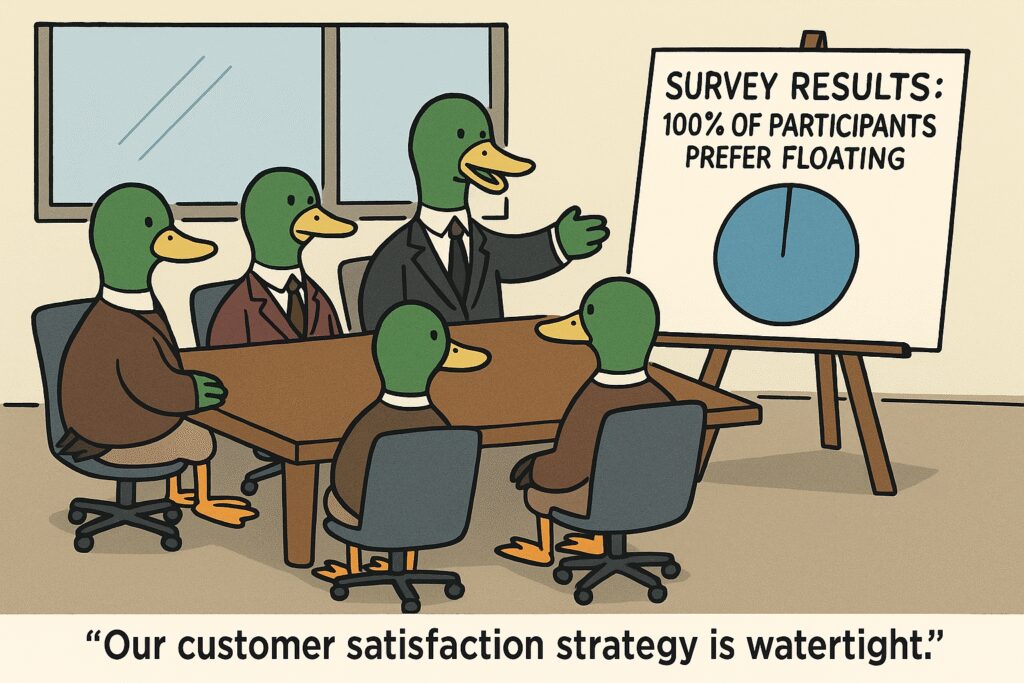

Sample bias is one of the most overlooked threats in data-driven environments. It occurs when the data used for analysis, training, or forecasting does not accurately reflect the population or reality it aims to represent. This bias distorts insights, reinforces blind spots, and leads to poor decisions—even when the analysis appears statistically sound.

In risk management, the danger lies in the illusion of certainty. Leaders may feel confident in dashboards, reports, or models without realising they are acting on skewed information. Sample bias is not just a data science issue—it’s a critical business and strategic risk.

What Is Sample Bias?

Sample bias happens when the data selected for analysis does not represent the wider environment or population. In simple terms, if you ask the wrong group of people—or ignore key segments—you’ll get the wrong answers. And those errors are often magnified when automated systems or high-stakes decisions rely on them.

Common causes of sample bias

Several factors can cause sample bias:

- Convenience sampling – relying on the easiest data to collect rather than the most accurate.

- Exclusion bias – unintentionally leaving out key groups or perspectives.

- Historical bias – using legacy data that reflects outdated behaviours, norms, or imbalances.

In artificial intelligence, for example, training a model on data that mostly features one demographic—say, lighter-skinned faces—can result in poor performance for others. In market research, conducting surveys only online may miss older or less digitally active populations. Internally, if finance or HR models are built using outdated or limited historical data, they may reflect past bias rather than present reality.
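To see how convenience sampling distorts a result, here is a minimal Python sketch built on an entirely hypothetical population. It has 700 people reachable online, 80% of whom view a product favourably, and 300 people offline, only 40% of whom do. The figures are invented for illustration. The point is that surveying only the online group overstates support, even though nothing is wrong with the arithmetic itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Person:
    online: bool      # reachable by an online survey?
    favourable: bool  # views the product favourably?


def build_population() -> list[Person]:
    """Hypothetical population: 700 online (560 favourable), 300 offline (120 favourable)."""
    online = [Person(online=True, favourable=i < 560) for i in range(700)]
    offline = [Person(online=False, favourable=i < 120) for i in range(300)]
    return online + offline


def favourable_rate(people: list[Person]) -> float:
    """Share of people who view the product favourably."""
    return sum(p.favourable for p in people) / len(people)


population = build_population()
online_only = [p for p in population if p.online]  # the convenience sample

true_rate = favourable_rate(population)
survey_rate = favourable_rate(online_only)
print(f"True rate: {true_rate:.2f}, online-only estimate: {survey_rate:.2f}")
```

Running it prints `True rate: 0.68, online-only estimate: 0.80`. The twelve-point gap comes entirely from who was asked, not from how the answers were analysed.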

Real-World Consequences

Technology fails when data excludes

One high-profile example is AI facial recognition. Systems trained with narrow datasets have misidentified women and people of colour at alarmingly high rates. This has led to wrongful detentions, reputational damage, and regulatory scrutiny. What appears to be a technical glitch is, in reality, a failure of data design.

Misguided strategies from flawed surveys

Surveys and feedback loops are another common pitfall. A product campaign may flop not because of the offer, but because the underlying survey excluded key buyer personas. If only digitally connected users are consulted, your insights ignore those with limited access—often the very customers you aim to reach.

Biased models reinforce biased decisions

Within companies, risk lurks in legacy data. HR tools that predict future talent based on historical promotions may reinforce outdated norms. Financial models based on pre-digital customer behaviour may fail to capture how markets have evolved. In each case, sample bias silently undermines fairness, accuracy, and foresight.

Why It’s a Risk Issue

Sample bias may begin as a subtle flaw in how data is collected, but its consequences quickly spread across the business. When models are built on biased data, their outputs can be systematically wrong in the real world, however accurate they appear on paper. For risk professionals, this isn’t just a statistical issue; it’s a strategic blind spot.

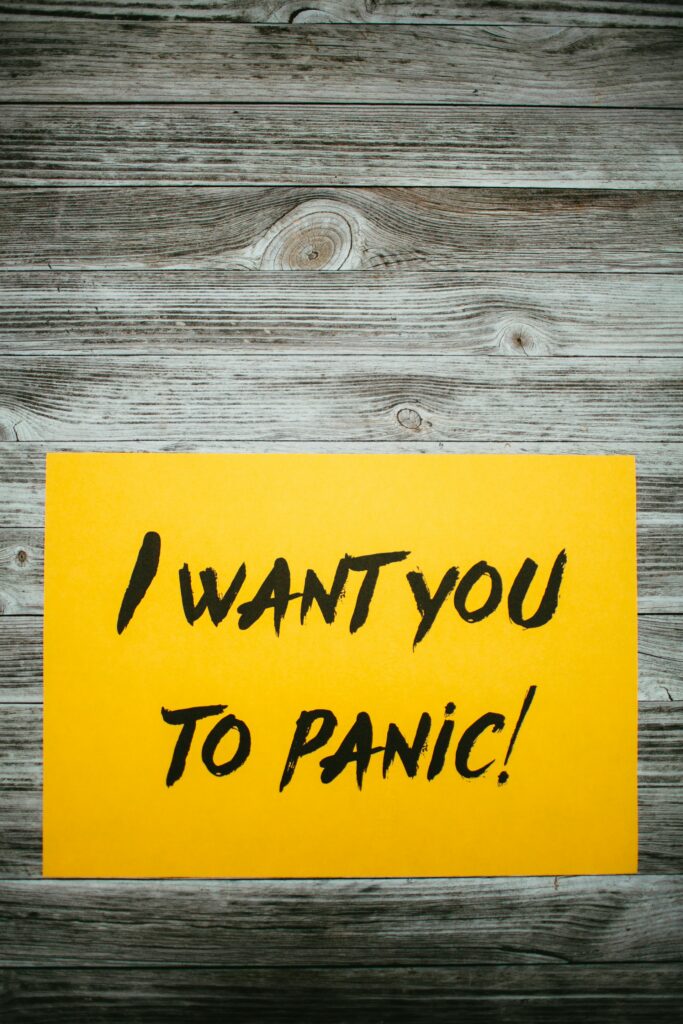

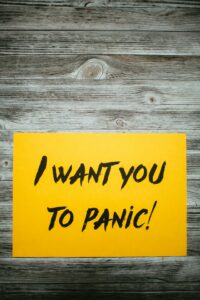

False confidence, real exposure

One of the most damaging outcomes of sample bias is the false confidence it creates. When results are presented with clean visualisations and impressive precision, leaders may assume they are looking at the truth. In reality, they’re often acting on distorted assumptions. This creates vulnerabilities across compliance, operations, customer experience and brand reputation.

In high-stakes environments—such as financial services, healthcare, or recruitment—these flawed decisions can cause measurable harm. Failing to detect sample bias can lead to discriminatory systems, mispriced risk, ineffective mitigation strategies, or regulatory breaches. Ethical concerns and reputational fallout often follow.

How to Detect and Correct It

Mitigating sample bias starts with treating it as a shared responsibility—not a task reserved for data scientists alone. It must be embedded in data governance, quality assurance, and strategic planning.

Audit your inputs

Regular audits of datasets are essential. This includes evaluating how data is sourced, whether key groups are underrepresented, and how historical trends may be reinforcing legacy bias. Understanding your data’s origin is as important as understanding its structure.
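One simple way to check for under-representation is to compare each group’s share of your dataset with an external benchmark, such as census or customer-base figures. The sketch below assumes you have a group label for each record and a benchmark share for each group. The function name, the age bands, the benchmark values and the 0.8 threshold are all hypothetical choices made for illustration.

```python
from collections import Counter


def representation_audit(sample_groups, benchmark_shares, min_ratio=0.8):
    """Compare each group's share of a dataset with its benchmark share.

    Returns {group: (sample_share, benchmark_share, ratio, flagged)}, where a
    group is flagged if sample_share / benchmark_share falls below min_ratio.
    Groups missing from benchmark_shares are not assessed.
    """
    counts = Counter(sample_groups)
    total = sum(counts.values())
    report = {}
    for group, expected in benchmark_shares.items():
        observed = counts.get(group, 0) / total
        ratio = observed / expected  # assumes every benchmark share is > 0
        report[group] = (observed, expected, ratio, ratio < min_ratio)
    return report


# Hypothetical dataset of 1,000 records labelled by age band.
sample = ["18-34"] * 500 + ["35-54"] * 400 + ["55+"] * 100
# Hypothetical benchmark, e.g. the age mix of the customer base.
benchmark = {"18-34": 0.30, "35-54": 0.35, "55+": 0.35}

report = representation_audit(sample, benchmark)
for group, (observed, expected, ratio, flagged) in report.items():
    note = "  <- under-represented" if flagged else ""
    print(f"{group:>5}: {observed:.0%} of sample vs {expected:.0%} benchmark (ratio {ratio:.2f}){note}")
```

Here the 55+ group makes up 10% of the sample against a 35% benchmark, so it is flagged. A flag is a prompt for investigation, not proof of harm. The right threshold depends on how the data will be used.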

Design with inclusion in mind

Bias often arises because certain groups are excluded from the outset. Build diversity into data collection by intentionally including different demographics, behaviours, and outliers. If that’s not possible, identify and flag the limitations of your dataset before it feeds critical systems.
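Stratified sampling is one standard way to build inclusion into collection. You set a quota for each group and draw from each group separately. The sketch below uses a hypothetical function and made-up records, and assumes proportional quotas. It also records a shortfall wherever a group simply has too few records. That shortfall is exactly the kind of limitation worth flagging before the data feeds a critical system.

```python
import random
from collections import defaultdict


def stratified_sample(records, group_of, target_shares, n, seed=0):
    """Draw about n records so each group's share matches target_shares.

    Quotas are proportional (round(n * share)), so the total can differ from n
    by rounding. Returns (sample, shortfalls), where shortfalls maps each group
    that had too few records to the number of records missing.
    """
    rng = random.Random(seed)
    by_group = defaultdict(list)
    for record in records:
        by_group[group_of(record)].append(record)

    sample, shortfalls = [], {}
    for group, share in target_shares.items():
        quota = round(n * share)
        pool = by_group.get(group, [])
        take = min(quota, len(pool))
        sample.extend(rng.sample(pool, take))  # sample without replacement
        if take < quota:
            shortfalls[group] = quota - take  # document the gap rather than hide it
    return sample, shortfalls


# Hypothetical records: plenty of online respondents, very few offline ones.
records = [("online", i) for i in range(900)] + [("offline", i) for i in range(40)]
sample, shortfalls = stratified_sample(
    records, group_of=lambda r: r[0], target_shares={"online": 0.7, "offline": 0.3}, n=200
)
print(len(sample), shortfalls)
```

The offline group has only 40 records against a quota of 60. The function therefore returns 180 records and reports a shortfall of 20 for that group. It does not quietly pad the sample with more online users.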

Add human judgement to the loop

Introducing “human-in-the-loop” checkpoints allows real-world context to guide model development and deployment. For high-impact decisions, human review can catch anomalies, flag ethical concerns, or ask the right questions—especially when automation misses nuance.
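A human-in-the-loop checkpoint can be as simple as a routing rule. This hypothetical sketch sends every high-impact case to a reviewer. It also sends any case where the model’s confidence falls below an assumed 0.9 threshold. In practice, the threshold and the definition of “high impact” would be set by your own governance process.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    case_id: str
    confidence: float  # model's confidence in its recommendation, 0 to 1
    high_impact: bool  # e.g. a credit refusal or a hiring rejection


def route(decision: Decision, min_confidence: float = 0.9) -> str:
    """Send high-impact or low-confidence cases to a human reviewer."""
    if decision.high_impact or decision.confidence < min_confidence:
        return "human_review"
    return "automated"


routes = [
    route(Decision("A-1", confidence=0.97, high_impact=False)),
    route(Decision("A-2", confidence=0.97, high_impact=True)),
    route(Decision("A-3", confidence=0.60, high_impact=False)),
]
print(routes)
```

Model confidence is not a measure of bias. A model trained on skewed data can be confidently wrong, which is why the high-impact rule applies whatever the score.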

Use synthetic data with caution

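Where gaps exist, synthetic data can be used to simulate missing perspectives and test how systems behave across scenarios. Synthetic records are usually generated from existing data, so they can reproduce the very gaps they are meant to fill. Treat them as a supplement to real-world inclusion, never a substitute. Used carefully, they can help reduce overfitting to narrow patterns and improve model robustness.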

Building Bias-Resistant Risk Culture

Fixing sample bias isn’t only about fixing datasets—it’s about changing the culture around how organisations handle information and risk.

Challenge “perfect” results

Teams should feel empowered to challenge insights that look too neat or too convenient. A clean dashboard may be hiding a messy truth. Questioning assumptions is a strength, not a weakness, especially when it prevents flawed decisions.

Collaborate beyond silos

Bias is more likely to go undetected when models are developed in isolation. Involving cross-functional teams in the design and review process helps surface blind spots and strengthens both performance and integrity. Business users, legal, compliance, and frontline staff all have valuable context that improves model accuracy and relevance.

Make data a governance priority

Ultimately, data quality, including sampling practices, must be treated as a board-level concern. Like weaknesses in financial reporting or cybersecurity, biased data creates reputational and legal risk. Creating clear accountability for data ethics helps shift the mindset from “good enough” to “fit for purpose.”

Conclusion: The Map Is Not the Territory

Sample bias builds beautiful models on shaky ground. The visual outputs may be elegant, and the statistics compelling, but if the underlying data is flawed, the conclusions are unreliable—and potentially dangerous.

Risk managers cannot afford to take clean-looking data at face value. Just because a model performs well in testing does not mean it will hold up in the real world. Without careful attention to sampling, organisations risk making confident decisions based on a narrow or inaccurate view of reality.

At The Risk Station, we help leaders go beyond the illusion of data perfection. Our tools, frameworks and advisory content help organisations detect weak signals, address structural blind spots, and build stronger, more ethical data foundations. Because in risk management, seeing the whole picture isn’t optional—it’s essential.